Testing a Greenfield Microservice

There are no universal rules for choosing a testing method; you have to optimize for what is important to you. While the test pyramid might be a good fit for more stable systems, our development team decided to avoid writing unit tests and instead rely on black-box API tests for our new microservice. We chose this approach to optimize for easy and safe rewrites, which we expect to happen in the early stages of the microservice. In this blog post, I'll walk you through our thinking process and explain how we came to this conclusion about testing our greenfield microservice.

Choosing a Testing Method

A year ago, our team extracted a core feature of our product, the part that creates ads on Facebook, from a monolith into a new microservice. After working with legacy code, we suddenly had a greenfield project where we could do things right from the get-go, follow best practices, and define testing standards from scratch. It soon became clear, though, that our team didn't share a common vision of the ideal way to test our microservice.

Some of us, including me, thought that we should follow textbook testing practices now that we had the chance. In other words, we should follow the test pyramid's proportions of fine- and coarse-grained tests. Other team members believed that it made more sense to rely solely on API tests. To reach a decision, we debated the topic in our team retrospective, where we examined the typical pros and cons of API tests and unit tests and then discussed which tradeoffs we could live with. I have summarized the benefits and disadvantages of the two testing methods below.

Textbook Pros and Cons of API Tests and Unit Tests

API Tests

- Ensure the API contract holds, which gives good confidence that the code works as intended.

- No need to change API tests when refactoring or rewriting functionality, which makes API tests a useful safety harness.

- Slower to run, as setting up the full service with databases and other dependencies takes time.

- If a test is failing, it typically takes more time to pinpoint what is broken.

- Don't influence code design, since they treat the system as a black box.

- Since API tests treat the system as a black box, they shouldn't require much mocking beyond some external services. Still, testing a corner case usually takes more setup code than testing the same corner case in isolation in a unit test.

Unit Tests

- With only unit tests, the test suite may pass while the feature is broken, which can happen if a failure occurs in the interface between modules.

- Since unit tests are more tied to the implementation, they might need updating when you refactor code. Tests may even become useless when you rewrite some functionality. This is why unit tests don't provide as good a safety harness for refactoring as API tests do.

- Fast to run, so they provide a faster feedback cycle during development.

- Usually easy to pinpoint what is causing a unit test to fail.

- Writing code that is easily unit testable can help in designing cleaner code, for example, with fewer side effects and cleaner interfaces.

- Depending on how strict you want to be about the level of isolation of unit tests, they may require a lot of mocking, which, in the worst case, makes them slow to write.

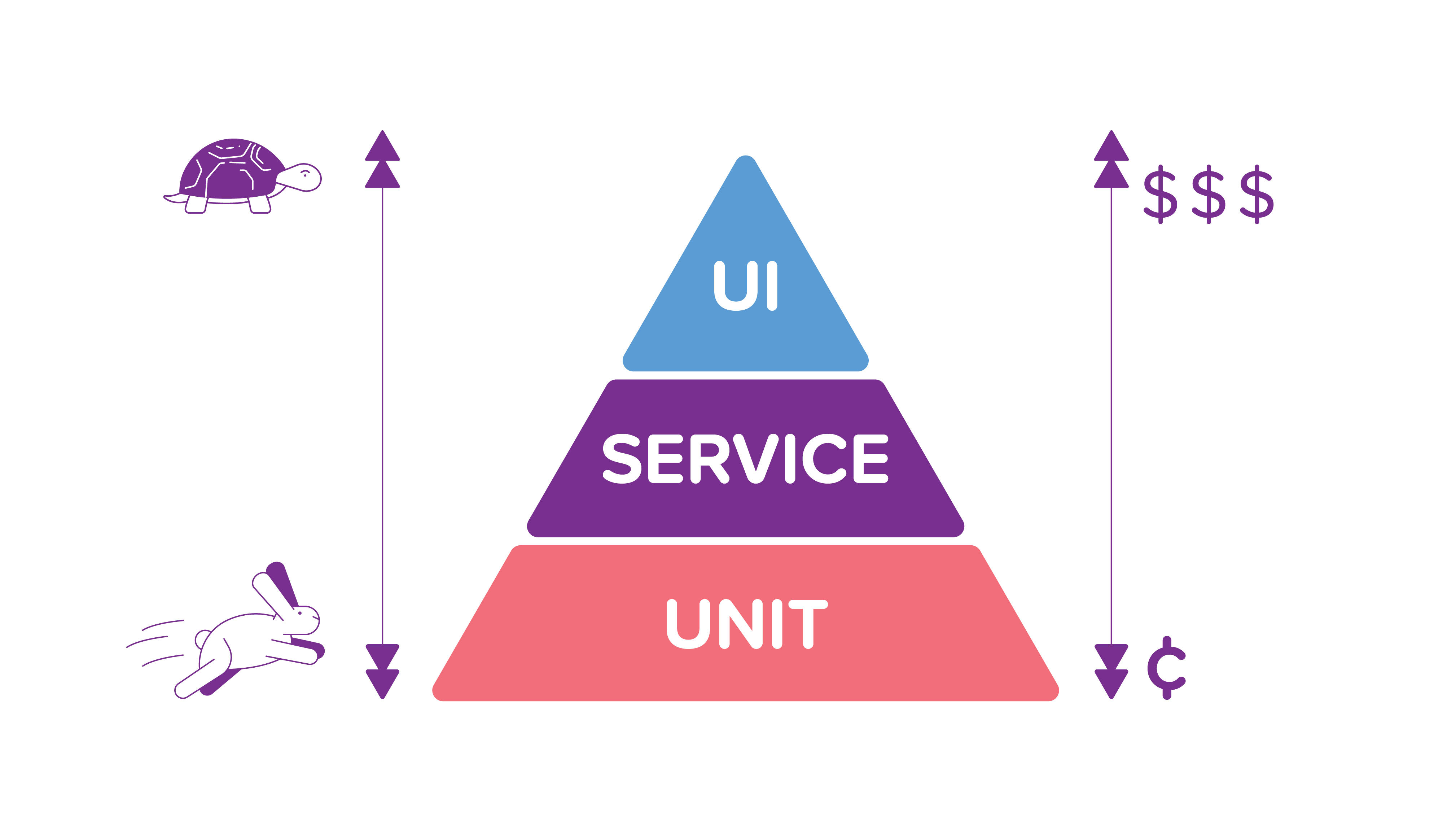

Test Pyramid

Of course, we aren't restricted to only one type of test, be it at the unit, API, integration, or UI level, or something completely different. Most projects have many types of tests in varying amounts. A common way to think about a sensible distribution of lower- and higher-level tests is the test pyramid.

The concept was popularized as the Test Automation Pyramid by Mike Cohn in his book Succeeding with Agile. The main idea is that you should have many fine-grained tests that are fast to run and fewer coarse-grained, higher-level tests that are typically slower to run.

Testing on different abstraction levels shouldn't mean duplicating tests, for example, testing the same thing in both API tests and unit tests. In his blog post The Practical Test Pyramid, Ham Vocke recommends deleting higher-level test cases that are already covered by lower-level tests. He argues that higher-level tests should focus on the functionality that lower-level tests can't cover.

The test pyramid as illustrated by Martin Fowler.

Optimizing for Easy Rewrites

In the end, we had to pick our poison. After carefully considering the pros and cons of API and unit testing, we decided to optimize our testing approach for painless rewrites. In our case, this meant being able to rewrite parts of the code without having to rewrite the tests at the same time.

The winning argument for sticking to API tests was that, as our service is still relatively young, we expect significant architectural changes. In rewrites and refactorings, it's essential to guarantee that the functionality doesn't change. API tests are a good fit here since they ensure that the API contract holds and only need changing when the API contract itself changes. Unit tests, on the other hand, are likely to become useless in rewrites, when the code they were testing no longer exists.

We have some early examples that seem to support our hypothesis. Our neighboring team, Bobby Tables, who own the reporting functionality of our app, have already done several rewrites of the pipeline used to query reporting data. They are glad they didn't have many unit tests, since keeping the tests in sync with the new code while making sure the new code works as it should would have been more cumbersome.

Dealing with the Issues API Tests Spawn

All solutions have their flaws, and so do API tests. Now that we’ve adopted our approach, we’re trying to mitigate the problems generated by the disadvantages of our chosen method: slowness, flakiness, and duplicate manual work.

Speeding Up API Tests

API tests require setting up the microservice and all its dependencies, such as databases and worker processes. A simple way to work around the performance issue is to run the tests in parallel. Team Bobby Tables did this and managed to bring the API test execution time down from around ten minutes to one minute.

However, as with any performance issue, you should first find the root cause (what is slowing the tests down) and try to fix that bottleneck before reconsidering the whole testing approach and going all-in on unit tests.

One possible issue is that the setup for each test is too heavy. Dealing with global application state, for example in the database, is difficult. We could have chosen to clean up the database between test runs so that every test starts from a clean slate. However, this would make the test setup slower and would also make it impossible to run tests that share the same database in parallel. In our microservice, we don't clean up the database between tests. Instead, we are careful with global state shared between tests, since we don't want our API tests to be flaky.

Making API Tests Less Flaky

Because they have a broader scope, API tests are more likely to share global state and so are more likely to become flaky. Flakiness can result from, for example, tests sharing the same database and accidentally using the same data.

To avoid flakiness, we aim to use only randomly generated data, instead of hardcoded data, for all test data identifiers. For example, we might get flaky test results with the following test data if multiple tests used the same hardcoded videoId with different uploadStatus values and wrote that data into the database.
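The post's original test data isn't reproduced here, so as a hypothetical sketch, imagine two tests that both insert a video with the same hardcoded `videoId`. A plain dict keyed by `videoId` stands in for the shared test database; the field values are invented for illustration.

```python
# Hypothetical shared store standing in for the test database.
shared_db: dict[str, dict] = {}


def insert_video(video: dict) -> None:
    # Upsert keyed by videoId, like a primary key in a real table.
    shared_db[video["videoId"]] = video


# Test A's fixture: hardcoded identifier.
video_for_test_a = {"videoId": "video-123", "uploadStatus": "processing"}
# Test B's fixture: the same hardcoded identifier, different status.
video_for_test_b = {"videoId": "video-123", "uploadStatus": "ready"}

insert_video(video_for_test_a)
insert_video(video_for_test_b)  # silently overwrites test A's row

# Test A now reads back test B's status.
print(shared_db["video-123"]["uploadStatus"])  # ready
```

When the tests run in parallel, which write lands last depends on timing, so test A passes or fails depending on scheduling: the definition of flaky.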

To mitigate this risk, we would use only randomly generated videoIds:
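A minimal sketch of that idea, again with a dict as a hypothetical stand-in for the database and a hypothetical `build_video` helper: each call gets a fresh `uuid4`-based `videoId`, so the two tests no longer collide.

```python
import uuid


def build_video(**overrides) -> dict:
    """Return video test data with a random, practically collision-free videoId."""
    video = {
        "videoId": f"video-{uuid.uuid4()}",  # unique per call
        "uploadStatus": "processing",
    }
    video.update(overrides)
    return video


# Hypothetical shared store standing in for the test database.
shared_db: dict[str, dict] = {}


def insert_video(video: dict) -> None:
    shared_db[video["videoId"]] = video


video_for_test_a = build_video(uploadStatus="processing")
video_for_test_b = build_video(uploadStatus="ready")
insert_video(video_for_test_a)
insert_video(video_for_test_b)

# Each test reads back exactly the row it wrote, regardless of ordering.
assert shared_db[video_for_test_a["videoId"]]["uploadStatus"] == "processing"
assert shared_db[video_for_test_b["videoId"]]["uploadStatus"] == "ready"
```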

All tests need to be written on the assumption that the database may contain any data, which means that assertions such as checking the number of videos stored in the database won't work. You should only assert on data that your own test inserted.

Reduce Duplicate Work

One main issue we face with our monolith's API tests is that adding properties to, or removing them from, centrally used data models such as accounts or ad campaigns may mean updating the test data of 200 tests. Luckily, we can easily avoid this issue by using factories to generate all test data from the start. Then we only need to change a data structure in one place: the factory for the data model being changed.
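A minimal factory sketch in Python, assuming a hypothetical ad campaign shape (the field names and defaults are invented). Tests pass only the fields they care about, so adding or removing a property means editing `CAMPAIGN_DEFAULTS` once rather than hundreds of test fixtures.

```python
import uuid

# Hypothetical default shape of an ad campaign used across tests.
# Adding or removing a property here updates every test's data at once.
CAMPAIGN_DEFAULTS = {
    "name": "Test campaign",
    "dailyBudget": 100,
    "status": "active",
}


def campaign_factory(**overrides) -> dict:
    """Build campaign test data with a random id and sensible defaults."""
    unknown = set(overrides) - set(CAMPAIGN_DEFAULTS)
    if unknown:
        # Catch typos and stale fields instead of silently adding them.
        raise ValueError(f"unknown campaign fields: {sorted(unknown)}")
    return {"id": f"campaign-{uuid.uuid4()}", **CAMPAIGN_DEFAULTS, **overrides}


# A test that only cares about the status overrides just that field.
paused = campaign_factory(status="paused")
```

Rejecting unknown overrides is a design choice: when a property is removed from the model, tests that still set it fail loudly instead of passing with stale data.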

When Should We Write Unit Tests?

We try to avoid getting too married to either API or unit tests and instead keep our options open for when our reality changes. If at some point we find ourselves spending too much energy on mocking or writing setup code, it may make sense to start writing tests at a different abstraction level.

For example, team Bobby Tables has begun writing unit tests to cover particular corner cases more thoroughly. Covering all the combinations in API tests by varying the API parameters would have resulted in lots of repetitive test code, whereas in a unit test the combinations they want to test are simply different arguments to a function. The unit-tested parts are mostly stateless data transformation functions, which also makes them easy to unit test. The tradeoff is that when we rewrite the unit-tested parts, we need to rewrite their tests as well.
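As a hypothetical illustration of that tradeoff, consider a stateless reporting transformation such as a click-through-rate calculation (the function and its corner-case behavior are invented, not Bobby Tables' actual code). Each corner case becomes one row of arguments in a table-driven test using the standard library's `unittest` and `subTest`, with no API or database setup.

```python
import unittest


def click_through_rate(clicks: int, impressions: int) -> float:
    """Hypothetical stateless transformation from a reporting pipeline."""
    if clicks < 0 or impressions < 0:
        raise ValueError("counts must be non-negative")
    if impressions == 0:
        return 0.0  # avoid division by zero for rows without impressions
    return clicks / impressions


class ClickThroughRateTest(unittest.TestCase):
    # Each corner case is just a different set of arguments.
    CASES = [
        (0, 0, 0.0),
        (5, 0, 0.0),
        (0, 100, 0.0),
        (25, 100, 0.25),
        (100, 100, 1.0),
    ]

    def test_cases(self):
        for clicks, impressions, expected in self.CASES:
            with self.subTest(clicks=clicks, impressions=impressions):
                self.assertAlmostEqual(
                    click_through_rate(clicks, impressions), expected
                )

    def test_negative_counts_rejected(self):
        with self.assertRaises(ValueError):
            click_through_rate(-1, 10)
```

Saved as `test_ctr.py`, this runs with `python -m unittest test_ctr`. Covering the same five cases through the API would mean five full requests, each with its own setup.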

We also expect that black-box testing a system with more responsibilities will be more complicated than testing a more narrowly scoped microservice. Our team's Facebook ads microservice has a smaller scope than the monolith we extracted it from, so the API test setup the monolith requires is a lot longer than the microservice's, even when testing the same cases. In a way, the microservice's API tests could be seen as component tests of a single module of the monolith.

So if the test setup starts becoming too complex because the system has too many responsibilities, that may be our cue to start testing at a lower level. For loosely coupled functionality with clean interfaces, as in a modular monolith, we can write tests for those components in isolation. If we later extract a module into a new microservice, those component tests can serve as useful documentation of the new microservice's requirements.

Testing Interoperability Between Services

A microservice architecture adds its own problems to the mix. Testing that everything works together is more difficult because the test setup needs multiple services running simultaneously. We don't run large-scale tests that spin up several services to ensure interoperability between the monolith and our Facebook ads microservice. Instead, our microservices are mocked out in the monolith's tests.

To tackle this problem, we have found it invaluable to enforce rigorous OpenAPI specifications that define the schema of the data passed to and returned by our microservice's endpoints. A request fails if its data doesn't match the expected form, which allows us to rely on the input data. The verification is done in both the monolith and the microservice.

Apart from running these verifications in production, we also run them in our automated tests. So even though the microservice is mocked in the monolith's API tests, the tests guarantee that both the data passed to the mock and the canned response it returns are in the correct form. Therefore, if the microservice's OpenAPI specification is updated and we forget to update either the data passed to the mock or its canned response, the tests catch it. We also rely heavily on our monitoring system and alerts for relevant metrics, which have enabled us to react quickly if something goes wrong.
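A minimal sketch of a schema-validated mock, under loud assumptions: the two schemas below are hypothetical and drastically simplified (required fields and Python types only), and the hand-rolled `validate` function is a stand-in for a real OpenAPI or JSON Schema validator that would load the microservice's actual specification. It shows where the checks sit: the canned response is validated when the mock is created, and every request is validated when the mock is called.

```python
from typing import Callable

# Hypothetical, heavily simplified schemas standing in for the
# microservice's OpenAPI specification.
CREATE_AD_REQUEST = {"required": {"campaignId": str, "budget": int}}
CREATE_AD_RESPONSE = {"required": {"adId": str, "status": str}}


class SchemaError(ValueError):
    pass


def validate(payload: dict, schema: dict) -> None:
    """Check that required fields exist and have the expected Python type."""
    for name, expected_type in schema["required"].items():
        if name not in payload:
            raise SchemaError(f"missing field: {name}")
        if not isinstance(payload[name], expected_type):
            raise SchemaError(f"field {name} should be {expected_type.__name__}")


def validated_mock(canned_response: dict) -> Callable[[dict], dict]:
    """Mock the microservice, validating both the canned response and requests."""
    # A canned response that no longer matches the spec fails immediately.
    validate(canned_response, CREATE_AD_RESPONSE)

    def create_ad(request: dict) -> dict:
        # Data the monolith sends must match the spec, too.
        validate(request, CREATE_AD_REQUEST)
        return canned_response

    return create_ad


mock = validated_mock({"adId": "ad-1", "status": "pending"})
response = mock({"campaignId": "c-1", "budget": 100})
```

If the specification gains a new required field, both stale canned responses and stale request payloads in the monolith's tests start raising `SchemaError`, which is the safety net the paragraph describes.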

Learning: System Maturity Counts

My biggest learning from the debates we had as a team was that the optimal way to test a system depends on its maturity. In the case of our rather new microservice, it makes sense to rely on coarse-grained tests to make the system more adaptable to change. As the system matures and becomes more complex, it starts to make sense to invest in unit tests and eventually have both fine- and coarse-grained tests, possibly in the proportions suggested by the test pyramid.
